A tale of three computers

These are the days of miracle and wonder

I went to look at a new computer today. I know there are more interesting ways to start a newsletter, but this was a really nice piece of kit, impressive enough to start me thinking about progress in technology and about two other computers, past and future.

In his 1999 book The Nudist on the Late Shift, which captures the madness of dot-com era Silicon Valley, writer Po Bronson describes how in late 1997 it became a “very cool thing to do if you were hosting a party anywhere near downtown San Jose … to walk through the Mae”. The Mae was an internet exchange point, “handling, by some estimates, as much as 40% of the nation's Internet traffic”.

Bronson describes the anticlimax of expecting “the clicking and clacking and switching and routing, the loud roar of the Internet”, but finding only “three gigabit switches, each about the size of a mini-microwave”. It’s a nice illustration of exponential growth and technological progress that, twenty-five years on, Sky Broadband promise to supply your own house with a rate of traffic equal to half of what the entire internet carried back then. My experience, though, was nothing like Bronson’s: I certainly didn’t walk away disappointed.

The computer I went to see is called Isambard-AI, and it’s located on the outskirts of Bristol. The statistics are pretty staggering: it’s the 11th most powerful supercomputer anywhere in the world, it weighs 150 tonnes, and has over 5,000 GPUs. As you can imagine, all that computing potential requires a battle between power demands and cooling, but despite that the machine claims to be the 4th greenest supercomputer in the world. And as a national resource, backed by £225m in Government investment, it’s going to be solving problems for research groups all around the country.

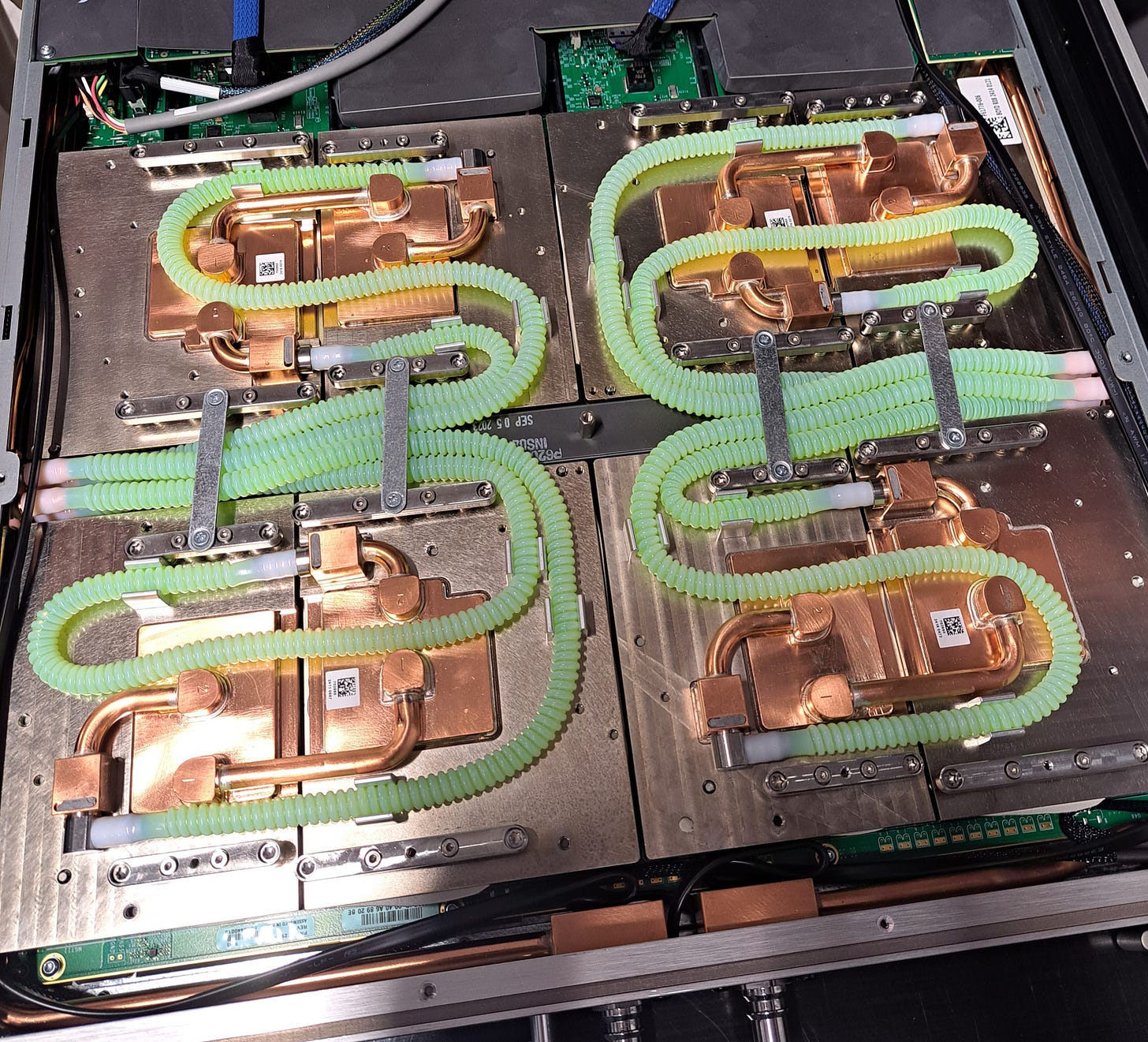

It’s fair to say that the experience wasn’t underwhelming like Bronson’s was. While there wasn’t exactly a roar of computation either, and the room itself was cool enough to feel like a great place to work in the summer, it was hard to ignore the sheer power present. Each of these units with a handle (along with its cooling inflow and outflow) represents multiple top spec GPUs:

and thousands of such units are racked up there. But to be honest, it was maybe easiest to grasp the computational power through the infrastructure needed to support it: the power cables the thickness of your arm, the constantly cycling cooling system, and the heat exchangers and fans outside. As a result, the whole setup almost felt more like the setting for the final boss battle in a Terminator movie than the cathedral of AI that it really is.

I certainly didn’t go away disappointed like Bronson (and thanks to the team there for the tour). But beyond that, the experience got me thinking about computers from a long time ago, and computers yet to come, and about the difference between science and engineering. I work in a recently merged Faculty of Science and Engineering, and have a mathematical background but have published a lot with engineers, so I am probably closer to this interface than many.

I’d tentatively suggest the definition that science tells you how to solve a problem, but engineering tells you how to solve it at scale, thousands or billions of times per second. If you think about it, then you have to conclude that you really need both of these, working closely together - and that hasn’t always happened as it should.

Part of the reason for my setting up on Substack was to archive old Twitter threads, so here’s one I wrote in 2021 about the work of Bill Tutte at Bletchley Park:

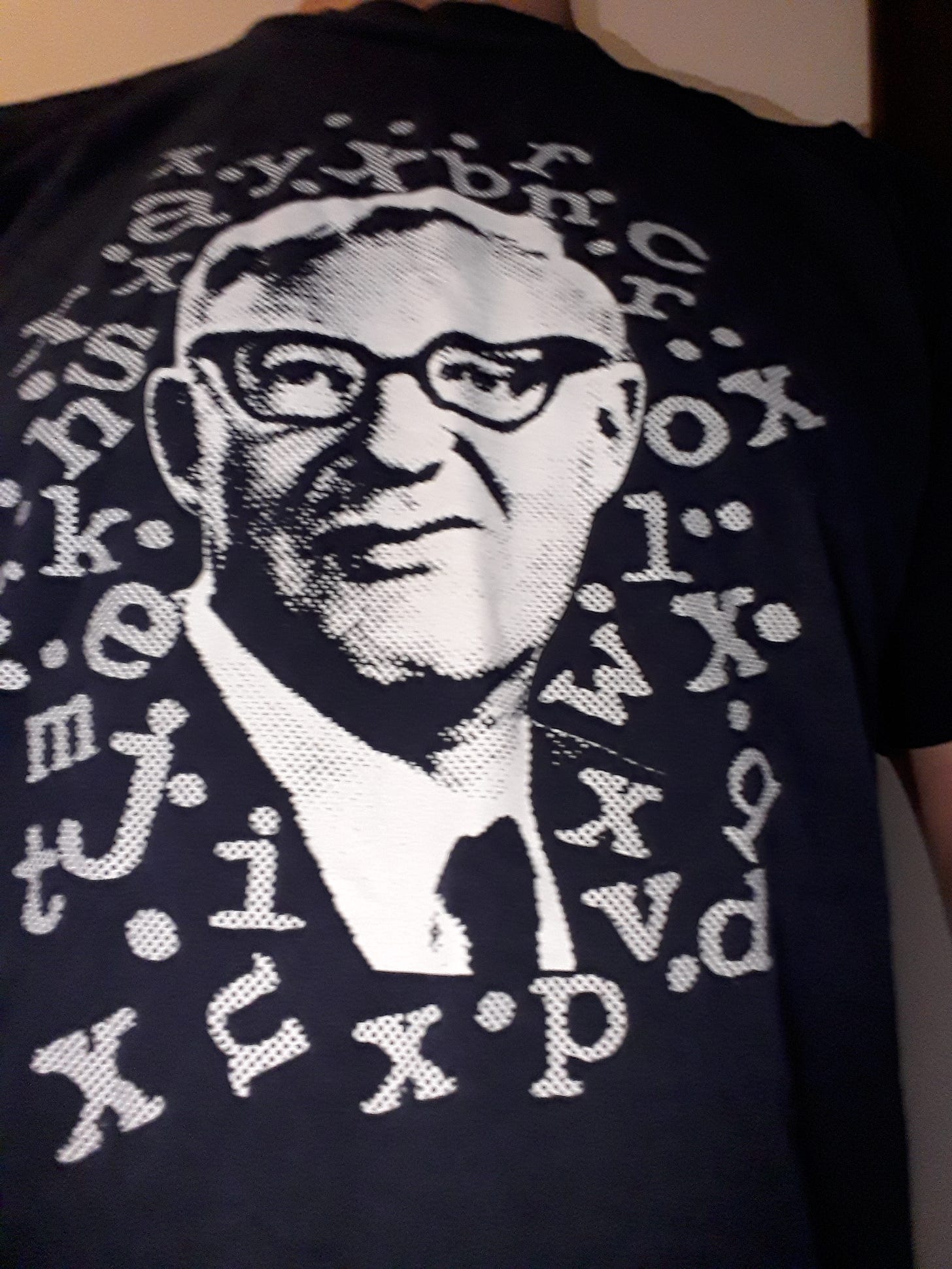

Today's being marked as the 80th anniversary of the Enigma code being broken at Bletchley, as an opportunity to celebrate the value of pure maths, and of course I'm down with that. However, I've got my geekiest T shirt on today and that's not Alan Turing:

Of course the work of Turing (and a whole team at Bletchley, as well as all the other links in the chain) was brilliant and vital. Enigma was fiendishly complicated, and I have no doubt that breaking it (as much via Bayesian stats as pure maths btw) enabled D Day to succeed.

But it's important to remember that Enigma was a development of a pre-war commercial machine. We knew roughly how it worked, and while it was successively refined, brilliant Polish mathematicians such as Rejewski and Zygalski had been breaking earlier versions in the 1930s.

Whereas the machine that Bill Tutte (the guy on my T shirt) and colleagues broke was a whole other beast. This was Lorenz, used for the highest value messages, including Hitler's personal commands, and we never even saw one until the war was over.

But, simply from the stream of 0s and 1s it produced, Tutte worked out that letters were ever so slightly more likely to repeat every 41 symbols, suggesting that there was a wheel of size 41, in a machine he'd never seen.

From this, Bletchley were gradually able to spot other periodicities, and find the size of other wheels. This is amazing. It's something like solving a jumbo Times cryptic crossword without seeing the grid or knowing how long any of the words are meant to be.
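To get a feel for how a wheel size can leak out of nothing but a stream of 0s and 1s, here is a minimal toy sketch. It is emphatically not the real Lorenz structure or Tutte's actual method: the single hidden wheel, the noise rate, the stream length and all the function names are assumptions made up for illustration. It simply counts how often a symbol matches the one a fixed number of places later, and picks the gap with the most matches.

```python
import random

WHEEL_SIZE = 41          # the period Tutte inferred; everything else here is invented
NOISE_RATE = 0.2         # assumed chance that the rest of the machine flips a symbol
STREAM_LENGTH = 20_000   # assumed amount of intercepted material


def make_stream(rng):
    """Toy stream: one hidden repeating wheel pattern, blurred by random flips."""
    wheel = [1 if rng.random() < 0.5 else 0 for _ in range(WHEEL_SIZE)]
    return [
        wheel[i % WHEEL_SIZE] ^ (1 if rng.random() < NOISE_RATE else 0)
        for i in range(STREAM_LENGTH)
    ]


def repeat_rate(stream, lag):
    """Fraction of positions whose symbol matches the one `lag` places later."""
    pairs = len(stream) - lag
    return sum(stream[i] == stream[i + lag] for i in range(pairs)) / pairs


def best_lag(stream, candidates):
    """Candidate gap at which symbols repeat most often."""
    return max(candidates, key=lambda lag: repeat_rate(stream, lag))


stream = make_stream(random.Random(1941))   # fixed seed so the run is repeatable
guess = best_lag(stream, range(2, 61))
print(guess, round(repeat_rate(stream, guess), 3))
```

In this toy model, two symbols 41 places apart match whenever both or neither were flipped, so the expected repeat rate at that gap is 0.8² + 0.2² = 0.68, clearly above the other gaps. The real signal was far fainter, which is what makes the original achievement so striking.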

In fact, there were twelve wheels of different sizes, giving 43×47×51×53×59×37×61×41×31×29×26×23 or 16 billion billion different start positions. And this was why Tommy Flowers and his team needed to build Colossus, the world's first computer, to routinely break the messages.
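The "16 billion billion" figure is easy to check. A short sketch that just multiplies the twelve wheel sizes quoted above (the variable names are mine):

```python
from math import prod

# The twelve wheel sizes quoted in the thread, in the same order.
WHEEL_SIZES = [43, 47, 51, 53, 59, 37, 61, 41, 31, 29, 26, 23]

# One start position per wheel, so the total is the product of the sizes.
start_positions = prod(WHEEL_SIZES)

print(f"{start_positions:,}")
print(f"about {start_positions / 1e18:.0f} billion billion")
```

The product comes out at roughly 1.6 × 10^19, far too many settings to try by hand.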

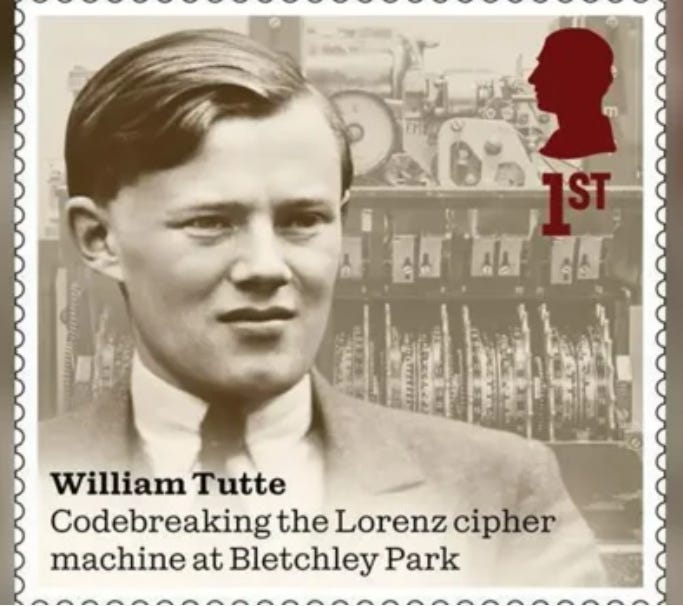

Anyway, today is a good day to remember Tutte, who after the war developed a huge amount of the field of "graph theory", which underlies our understanding of networks, enabling the Google search algorithm and unpicking COVID infection patterns.

I think this thread was right: it was an astonishing achievement of scientific analysis. But while Tutte has started to gain more recognition, for example with a VE Day stamp, Flowers remains more obscure to the general public.

So, while that thread focused on Tutte, visiting Isambard-AI also got me thinking more about Flowers. Partly thanks to films like The Imitation Game and Enigma, and to reading about characters like Old Etonian papyrologist (and John Maynard Keynes’ ex) Dilly Knox making huge contributions to the code breaking, it’s tempting to think that the whole place must have been recruited from Oxbridge High Tables. (Indeed, in my time on High Table in the early noughties, there was at least one Bletchley veteran there.)

But Flowers was different. He’d come from a poor background, earned his Electrical Engineering degree via night school, and worked for the Post Office. Nonetheless, it was exactly that experience, and his vision to build a computing device out of valves, which led to his construction of the Colossus computer. Colossus came eighty years before Isambard-AI and had only a fraction of its power, even on a log scale, but it’s still possible to draw a line from one to the other.

Further, it’s tempting to wonder if there was a Sliding Doors moment when the UK lost its chance to lead the electronics revolution worldwide. Colossus was dismantled after the war, and since Flowers had personally paid for many of its components, his compensation of £1,000 apparently left him out of pocket for the experience. It’s hard not to think that if Flowers had been a Cambridge undergraduate from a famous old school then he might have received better treatment. And while he has received more respect during the last ten years from those in the know, his achievement in automating Tutte’s solution of the Lorenz cipher problem probably isn’t anything like as well known as it should be.

I’ve talked about the past (Colossus), and the present (Isambard-AI) - albeit a supercharged version of what is possible now. But what of the future? While Moore’s Law has given the roadmap of repeated doubling of performance for far longer than it had any right to, at some stage it has to stop. There are physical limits in terms of miniaturization, and so for progress to continue we might need new technology rather than just more and more silicon running faster and faster.

In fact, we’ve had a pretty good blueprint for one such technology for the last 30 years. Since Peter Shor published his factoring paper in 1994, we’ve known that a powerful enough quantum computer could potentially break all internet encryption by factoring big numbers into primes. However, progress hasn’t always been great. As recently as 2019, people were discussing whether it was possible to factor 35 as 7×5 with a practical quantum computer - which is very much a problem in the range of a switched-on primary school child.

But things are gradually starting to change, and that’s where my third computer comes in. The reason that building a quantum computer is hard is that it’s necessary to control so-called qubits, which can be as small as a single electron. People argue about how many of these you might need, but numbers like a million aren’t off the table. It’s not hard to imagine that, like a magician keeping an eye on spinning plates, controlling the spins of a million different individual electrons might be … challenging. Things are going to go wrong, errors are going to creep in.

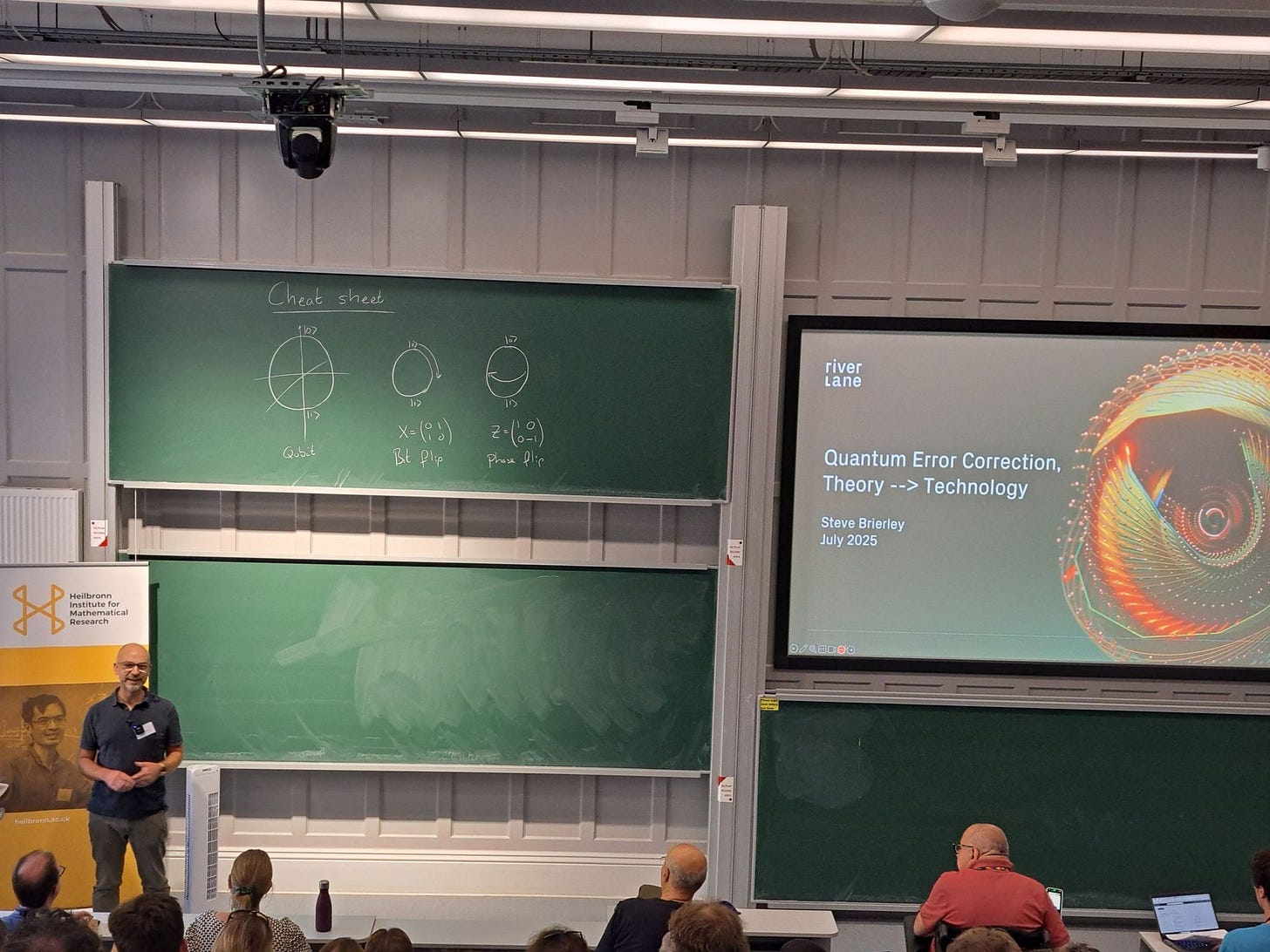

However, just as it is possible to spot and to correct errors in sequences of bits, it’s possible to do the same in this quantum situation. As Tutte and Flowers showed, though, it’s one thing to know that something is theoretically possible, and another to implement it at scale. Last week I went to a talk by my former colleague Steve Brierley, now CEO of quantum error correction company Riverlane, which convinced me that things are on the move.

The technical details are over my head, but there’s a paper on the arXiv, which is always a good sign. As far as I could understand from the talk, the idea is to have a lot of redundancy between qubits, in such a way that errors in particular places produce a particular signature (via something called a surface code). Riverlane’s algorithm can then take the signature, locate the error and correct it.
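The classical version of this "signature" idea is easier to show in code. The sketch below is a Hamming(7,4) code on ordinary bits, offered only as an analogy: it is not a surface code, and it is certainly not Riverlane's algorithm. Three redundant parity bits are added to four data bits so that a single flipped bit produces a signature (the syndrome) equal to the position of the error. The function names are my own.

```python
from functools import reduce

DATA_POSITIONS = (3, 5, 6, 7)    # 1-based positions holding the data bits
PARITY_POSITIONS = (1, 2, 4)     # 1-based positions holding the redundant bits


def syndrome(word):
    """XOR together the 1-based positions of every 1 bit; 0 means no error seen."""
    positions = (i + 1 for i, bit in enumerate(word) if bit)
    return reduce(lambda acc, pos: acc ^ pos, positions, 0)


def encode(data):
    """Spread 4 data bits over a 7-bit word, choosing parity so the syndrome is 0."""
    word = [0] * 7
    for pos, bit in zip(DATA_POSITIONS, data):
        word[pos - 1] = bit
    s = syndrome(word)
    for pos in PARITY_POSITIONS:
        if s & pos:
            word[pos - 1] = 1
    return word


def correct(word):
    """Use the syndrome as a signature: it names the position of a single flip."""
    s = syndrome(word)
    fixed = list(word)
    if s:
        fixed[s - 1] ^= 1
    return fixed, s


def decode(word):
    """Read the 4 data bits back out of a (corrected) 7-bit word."""
    return [word[pos - 1] for pos in DATA_POSITIONS]


sent = encode([1, 0, 1, 1])
received = list(sent)
received[4] ^= 1                       # flip the bit at position 5 in transit
repaired, signature = correct(received)
print(signature, decode(repaired))     # signature 5, data [1, 0, 1, 1]
```

This toy only copes with one flipped bit per word, and correcting it is instant. The hard part in the quantum setting, as I understood it, is doing the equivalent lookup across a huge number of qubits fast enough to keep up.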

Just as Flowers’ valves could find the settings of the Lorenz machine sufficiently fast to break Hitler’s code before the messages were out of date, the key thing is that this algorithm now lives on a (classical) chip. Once again, the maths of how to solve the problem feeds into the engineering of how to do it fast enough. Being able to solve the problem in microseconds stops errors propagating through the system, and means there’s a chance to solve the hard problems we care about - and they have a chip which will help you on your way.

Like I say, the details of the science are beyond me. But even if I’m not the right person to ask, I can certainly point you at the nitty gritty of their roadmap, in the engineering terms of what kinds of chips and why. We’re probably still a long way off a quantum computer literally breaking the Internet, but it was certainly possible to believe that one day a solution might involve racks of Riverlane chips lined up like the Isambard-AI ones.

And at the very least, as someone who these days spends a lot more time in meetings and a lot less time in talks than I’d like, Brierley’s talk was certainly a memorable one, and it was great to see such a compelling vision of a future which almost seems like science fiction.