In case you’re wondering what I do all day, I’m going to take a departure from COVID, to talk about my actual job. Today (21st November 2022) I gave a symposium talk, and the first half was a non-technical introduction to Information Theory, which is the area of maths I spend my time researching, so I thought it might be fun to share those slides here. (Apologies for the lack of accessibility from not reproducing the words as alt-text).

Slide 1: just sets the scene. The photo is one I took in San Jose, when I visited for a workshop - it’s the two issues of the journal where Claude Shannon’s masterpiece was published back in 1948.

Slide 2: introduces the idea of a source of randomness, and mentions the idea that some sources are more random than others, and that this is somehow to do with predictability.

Of course, you can argue whether say someone typing really is random, because they presumably have something they want to say, but from the point of view of a mathematical model you can pretend that they are. For example, maybe there’s a set probability for what the next word is, given the previous word?

Slide 3: introduces Shannon’s idea of information. It’s clear that your view of the world changes more because of surprising events (if you hear that Manchester City won the league again, you probably shrug, if you hear that Leicester did, it might require you to re-evaluate a lot of things).

And (of course, this is me!) the right function to use to capture this is the logarithm. In fact in a sense, it’s the only function with the desirable property that learning two independent events (“Manchester City won the league, and I just rolled double six") makes the total information gained be equal to the sum of the information I would learn from each of these things alone.

Slide 4: introduces the idea of entropy as the amount of information that I expect to gain from my source. The key trade-off is that rare events bring lots of information when they happen, but by definition they don’t happen a lot. So, with the benefit of a worked example, we can start to believe that a process with equally likely outcomes is most unpredictable, and has biggest entropy.

Slide 5: summarises Shannon’s first big result, about compressibility. We might like to store the output of the source as efficiently as possible - think of zipping a document before you email it, to remove redundancy. Shannon showed that there is a limit to how much space this takes - there is an amount of “stuff” in the file, beyond which you can’t compress any more. Further, this limit is exactly the entropy! So, the outcome of predictable sources can be compressed more than unpredictable ones.

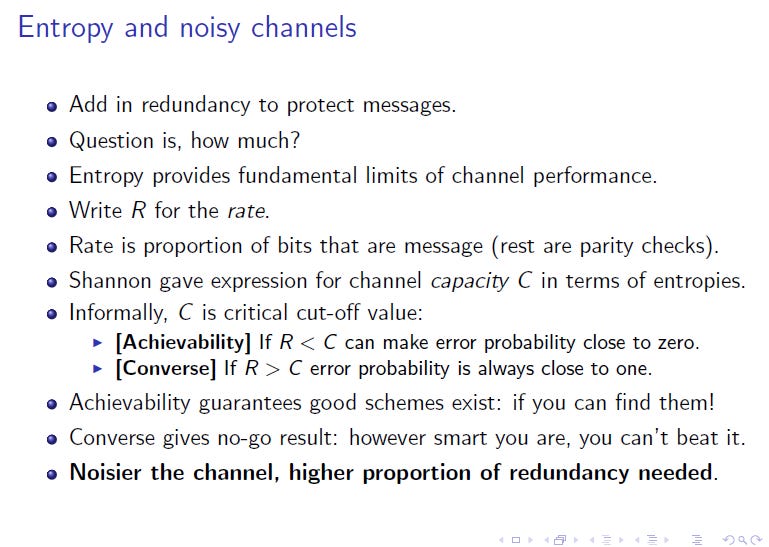

Slide 6: introduces the idea of a noisy communication channel, like the one between your mobile phone and the mast. Think of interference caused by other mobile users, or your signal bouncing off buildings on the way to the mast. This can be captured in a fairly general mathematical framework, where what you transmit and what you receive aren’t necessarily the same, but are governed by some kind of random process.

Slide 7: described the key theoretical result for noisy channels. That is, we can protect messages against noise by padding them out with redundancy (in a way that is the opposite of the compression ideas of Slide 5). Another of Shannon’s big theoretical contributions was to show that the amount of redundancy required can also be expressed in terms of the channel capacity, which in terms depends on entropy-type quantities.

The rest of the talk considered more modern developments, such as the fact that:

The capacity result really only holds for very long messages, which may not be practical for scenarios like low power sensors. The results need to be adjusted accordingly.

Other problems such as group testing (pooled testing) can be usefully understood in an information theory framework. (Lots of my recent work has been on this problem).

We may not care about recovering the exact bits of the message, but rather just its meaning (“this is a picture of a dog”). How does this affect the performance of the system, and does it leave us open to adversarial attacks?