Learning from error

I get knocked down, but I get up again

A few months ago, some people published something which found a counterexample to one of my old papers. The work was nearly twenty years old, I hadn’t really thought about that topic for a while, and I was extremely busy at the time and so I couldn’t be sure straight away. However, it seemed like they’d found an example where my theorem failed, which meant there must be a mistake in my proof.

Immediately I wrote to the editor of the journal, crediting the people who found the error, to draw this issue to their attention and to ask about the right procedure for a retraction or a correction (some parts seemed correct still). When I did find some time, I went back through the paper and checked it carefully. However, with some relief, I gradually came to realise that my work was actually fine (the other people had misunderstood some of my notation - I possibly could have made it clearer), and so nothing needed to change.

However, this isn’t a story about me being right: it’s a story about me being potentially wrong, and how to deal with that. It’s not a nice feeling to think that you might have made a mistake, and honestly it might have been tempting to duck the issue. But the way that science should proceed is by people reflecting on what they’ve written where necessary, and making appropriate adjustments to their own work.

In the same way, it’s important to emphasise that there were lots of times I was wrong during the pandemic. For example, I expected vaccination to be more of an “immune and you’re done” thing than it turned out to be, and expected that vaccinated people wouldn’t transmit the virus. (Vaccines are still great at preventing severe consequences, though.) I was probably too optimistic in the early days of the Delta variant and too pessimistic in the later days of it. I’m sure there are other examples too.

In that sense, I want to make it clear that I don’t regard having been wrong about something as disqualifying. I’ve said it many times before, but it’s exceedingly unlikely that anyone even got all the main calls right, let alone being correct on every detail. If we got rid of everyone from the discussion who’d made mistakes there would be nobody left. That’s fine, we learn from our mistakes and move on.

Rapid responses

So, anyway, this was the spirit in which I wrote in to the British Medical Journal this week. There’s been an article from a member of Independent SAGE calling out other people’s mistakes in the pandemic, so I made a rapid response pointing out a couple of places where I felt they’d been wrong about school closures and asking them to think about those:

In my view, learning the lessons of the pandemic requires all those who played a role in it to reflect on their positions. The question of whether Independent SAGE were right to argue that children should not receive in-person education at what was to prove the lowest prevalence level of the entire pandemic [4] should not be an exception to this.

This … did not go well. My response drew a reply from the original author saying my “criticisms are misleading”. Someone else wrote in to say that I “wanted children to get infected”, which was nice. The chair of Independent SAGE took to Twitter to say:

Relentless attempts to polarise debate around COVID mitigations are incredibly frustrating. It's not "do", or "do not", it's DO IT SAFELY!

I’m not planning to write back, because it feels pointless playing this particular game of Calvinball. But it’s still frustrating to me that, four years on, we are having this argument. In terms of DO IT SAFELY, my view is that back in June 2020, we had a binary question: should schools be open or not? It’s fine to disagree about the answer to that, but you can’t suddenly invent a third option.

It reminds me of people on Location Location Location who can’t decide between the big house by the main road and the little house in the great location, and who say “I just wish we could just get the big house in the great location for the same price”. That’s not an option!

Sadly, as anyone who has been on university committees (or has read Cornford’s Microcosmographia) will know, this is how academics tend to argue. We don’t say outright that we disagree with something, or we shouldn’t do it. It’s just that now is not the time, we shouldn’t rush into a decision, it merits more study to explore other options, we should form a sub-committee to report back to Governing Body next term.

Of course, those of us who wanted schools to be open wanted them to be open safely. We’re not monsters. We are just realistic about what was and wasn’t available at the time. The idea that if only we’d waited a couple of weeks longer then we would have had a Test and Trace system which could keep infections under control seems wildly optimistic.

And even if we’d followed Independent SAGE’s own guidelines it’s not obvious it would have meant that we would DO IT SAFELY. I’m sure they’d say now that the answer was masks and ventilation, yet their own document at the time mentions the word “mask” only five times, all in the context of listing other countries’ approaches. Meanwhile they went big (like we all did!) on the need for hand washing and hand sanitiser (see the WHO checklist on P33).

But this is all pointless. As this back and forth has shown, people can in good faith disagree about the meaning of words and the best way to implement things. But, there are other examples which are worse than that, and which are closer to my theorem story above.

Methods

Nearly two years ago (on 12th September 2022) I wrote a Twitter thread highlighting what I believed were serious mathematical errors in the May 2020 Independent SAGE report, meaning that the claimed risk to children was significantly exaggerated. Despite the fact that people from that organisation followed me there, nobody ever replied, either to agree or to disagree. For the sake of completeness and archiving, the thread is here, in italics, with footnotes to mention any more recent comments:

Since we are apparently relitigating the past ready for the Public Enquiry,1 who wants to read about how Independent SAGE's May 2020 Report on Schools overestimated the chances of a child being infected in the classroom, conservatively by a factor of 30, perhaps much higher? 🧵

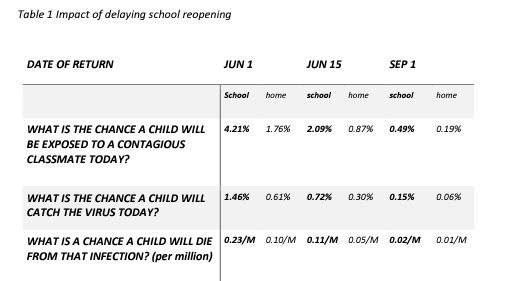

The report is here. On page 7, based on a class of 15, they estimate the chance that a child will be infected *today* at then-current prevalence levels was 1.46%. That sounds bad! A small percentage of a big number is a big number! We ought to check that.

So first, they estimate the chance a child will be exposed to a contagious (sic) classmate is 4.21%. They don't say how they get there, but I reckon it's something like this: if prevalence is 0.3%, the chance nobody else in the class is infected now is (1-0.003)^14 or 96%.2

I think this overestimates the proportion of classes with an infectious child in for at least three reasons:

1. The prevalence estimate is far too high. ONS tells us it was actually 0.06% at the time, so that would suggest (1-0.0006)^15 or 99.1% of classes would be infection free.3

So naively, 0.9% of classes would contain an infected child. But ..

2. We know that ONS is based on PCR positivity, and we know you will test positive that way for much longer than you are infectious. So naively applying ONS overestimates the infectious population.

3. The calculation assumes that all infectious kids will come to school as normal. But at the time, if you had symptoms you had to stay at home. Even if you lived with someone who had symptoms or a positive test, you had to stay at home.

So, I think 0.9% is a big overestimate. We can argue about how much effect 2. and 3. have, but I think conservatively at least half of PCR-positive kids wouldn't have been in school and infectious. So call it 0.45% of classes with an infectious kid in, being generous.

OK, so I think they've overestimated their first number by a factor of 10 or so. The second calculation says that there being a 4.21% chance of someone in the class being infectious gives each child a 1.46% chance of being infected *today*.

But those are really scary attack rates! It's saying that each infected person will infect 1.46*14/4.21 = 4.85 people *every day*. Even if you think an infectious person is in school for only 3 days (because weekends intervene), in that time they'd infect 4.85*3 = 14.6 people!

Remember that this is original Wuhan strain, so maybe R=3 on average. That is, each person infects 3 more people on average *across their whole infectious period*. Even if you think classrooms are spreadier places than normal, do you believe they are 5 times spreadier?

And that's assuming that none of the 3 infections happen anywhere else (and the report itself acknowledges that even not going to school you'd pick up infections).

So, again, being generous, if we imagine that each infectious school kid infects 5 classmates *in total*, that's about 1.66 per day, or a third of ISAGE's 4.85.

We can definitely argue about the exact numbers, but if you think they've overestimated the proportion of classes with an infectious kid by a factor of 10, and overestimated the spreadiness by a factor of 3, that's the factor of 30 I suggested at the top of the thread. /FIN

It’s possible I’m wrong. These are best effort calculations and of course rely on some assumptions. But I set those assumptions out clearly, and I still think it was a serious enough effort that it deserved a response.
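For anyone who wants to check the arithmetic, here is a minimal Python sketch of the two steps in the thread. The inputs are the figures quoted above; the function and variable names are purely illustrative, and the "half of PCR-positive kids" and "5 classmates over 3 school days" values are the thread's own stated assumptions, not measured quantities.

```python
# A rough re-run of the thread's arithmetic. All names are illustrative.

def p_class_has_infected(prevalence: float, others: int) -> float:
    """Chance that at least one of `others` children is infected,
    assuming each is infected independently with probability `prevalence`."""
    return 1 - (1 - prevalence) ** others

# Step 1: what share of classes contain an infectious child?
report_exposure = 0.0421                             # report's figure (4.21%)
reconstructed = p_class_has_infected(0.003, 14)      # my guess at their method
ons_based = p_class_has_infected(0.0006, 15)         # using ONS prevalence of 0.06%
adjusted = ons_based / 2    # assumption: half of PCR-positive kids are not
                            # both in school and infectious

# Step 2: how many classmates does one infectious child infect per day?
report_daily_risk = 0.0146                           # report's figure (1.46%)
implied_per_day = report_daily_risk * 14 / report_exposure
alternative_per_day = 5 / 3  # assumption: 5 classmates in total over 3 school days

factor_exposure = report_exposure / adjusted
factor_spread = implied_per_day / alternative_per_day
combined = factor_exposure * factor_spread

print(f"reconstructed exposure chance: {reconstructed:.2%}")
print(f"ONS-based share of classes:    {ons_based:.2%}")
print(f"implied infections per day:    {implied_per_day:.2f}")
print(f"overestimate factors: {factor_exposure:.1f} x {factor_spread:.1f} = {combined:.1f}")
```

Run without rounding, the two factors come out a little under 10 and a little under 3, so the combined figure is somewhat below the headline 30 that the thread reaches by rounding each factor. Changing either assumption moves the answer, which is rather the point of setting them out.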

Impact statement

The main reply that I got (not from Independent SAGE members themselves, but from their supporters) is that actually none of this matters. The argument goes that it was SAGE advising and the Government setting policy, and that this report actually had no effect.

I’m not convinced by this for two reasons. Firstly, clearly it was the intent to influence debate. You don’t go to the trouble of forming an organisation, hiring PR consultants, writing a report, going on the TV, writing for newspapers, tweeting, holding online events and so on just for the fun of it. Even if it didn’t have an effect, it’s not unreasonable to judge by what the effect was clearly meant to be.

Secondly, and again I’ve had pushback on this, my view is that this report was influential. For example, there were close links between the teachers’ unions and Independent SAGE, and the NEU used the report to argue that schools should not be open. And perhaps as a result, despite Government policy, many schools did their own thing:

Thousands of primary schools in England have defied the government’s call for schools to reopen amid concerns that coronavirus is still too prevalent to do so.

and I don’t think it’s unreasonable to ask what role this report played in that. That’s not me attempting to polarise debate, that’s trying to achieve some kind of closure on all this. But from what I’ve seen this week, I don’t think that’s ever going to happen.

Anyway, that’s more or less it from me. As I’ve mentioned before, I’m starting a new job as Head of School on Thursday, and I’m going to have a lot less time for tweeting and Substacking. I’m not saying “nothing new from me”, but it’s probably as good a time as any to draw a line under some of the pandemic stuff, and to move on. But if you found this one or anything else interesting, then do please share it more widely.

Thank you for reading.

1. Ok, it should be Inquiry, I can’t spell.

2. Elsewhere in the report it does confirm that this was exactly the calculation they were doing, I missed that at the time.

3. You can argue what the right prevalence figure is, and what was known at the time. But for example the ONS infection survey had estimated that 0.27% of the population were infected at the start of May, and Independent SAGE’s own report says that prevalence was halving every two weeks, so 0.06% at the end of May was entirely predictable based on their own numbers.
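The halving argument in that last footnote can be sketched in a couple of lines of Python. This assumes a clean exponential decline and takes "start of May" to "end of May" as four weeks; both are simplifications, and the function name is illustrative.

```python
def projected_prevalence(start: float, days: float, halving_days: float = 14.0) -> float:
    """Prevalence after `days`, assuming it halves every `halving_days` days."""
    return start * 0.5 ** (days / halving_days)

start_of_may = 0.0027   # ONS estimate quoted above (0.27%)
end_of_may = projected_prevalence(start_of_may, days=28)  # assumption: 28 days later

print(f"projected end-of-May prevalence: {end_of_may:.3%}")
```

Two halvings take 0.27% down to roughly a quarter of that, which is in the same region as the 0.06% figure used in the thread.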

Thanks for all the posts and tweets! And most of all showing people that one can argue scientifically without getting bound up in personal pride. I have come across other scientists in this space who are the absolute opposite, which is an utterly weird experience. I envy your students (apart from all the learning stuff obviously, once was fine).

Thank you for all the tweets and posts; all the best with the new job.